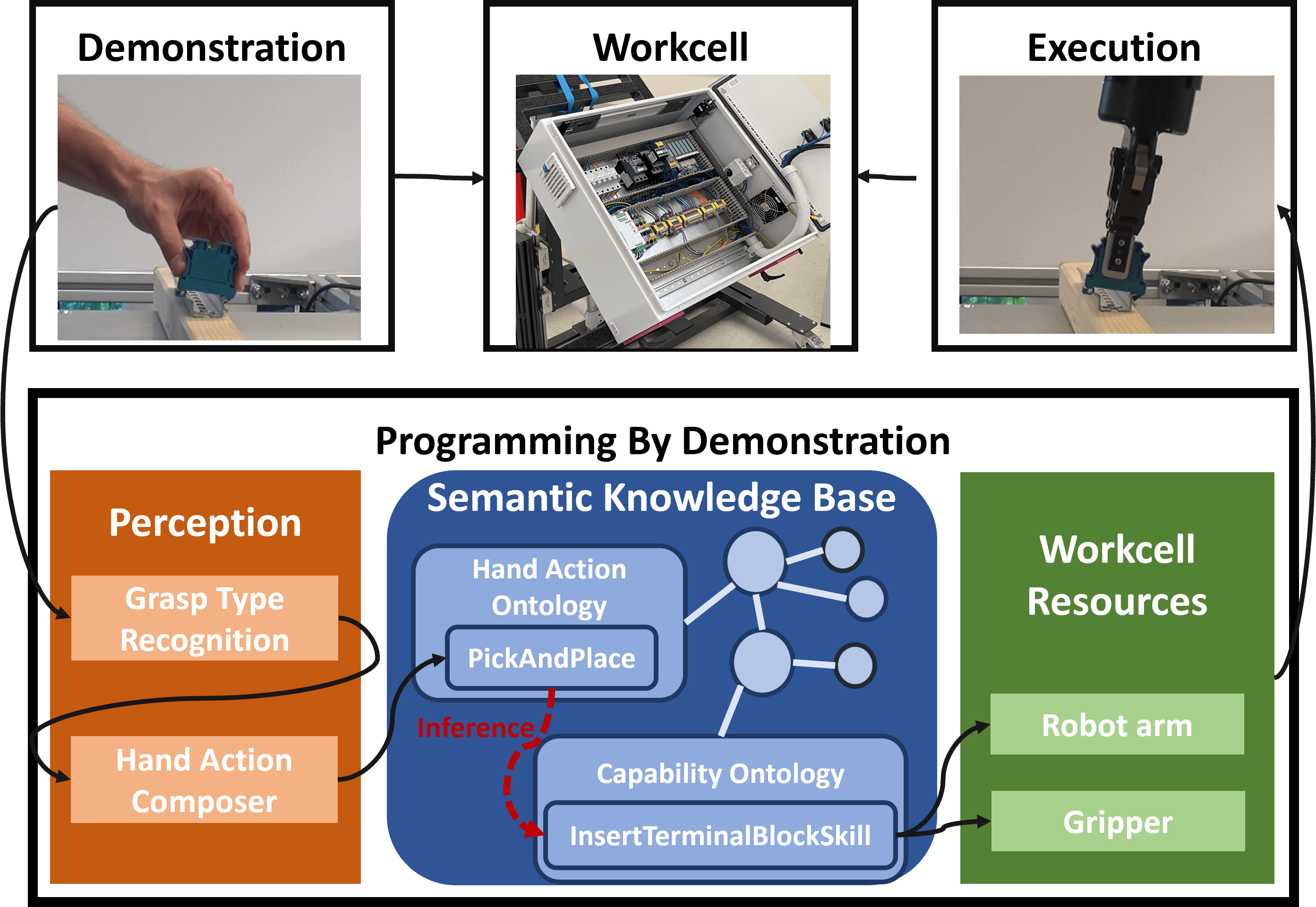

In this paper, we introduce a knowledge-based Programming by Demonstration (kb-PbD) paradigm to facilitate robot programming in small and medium-sized enterprises (SMEs).

PbD in production scenarios requires recognizing product-specific actions. It is hampered, however, by the lack of suitable and comprehensive datasets, because the hand actions involved vary widely across production scenarios.

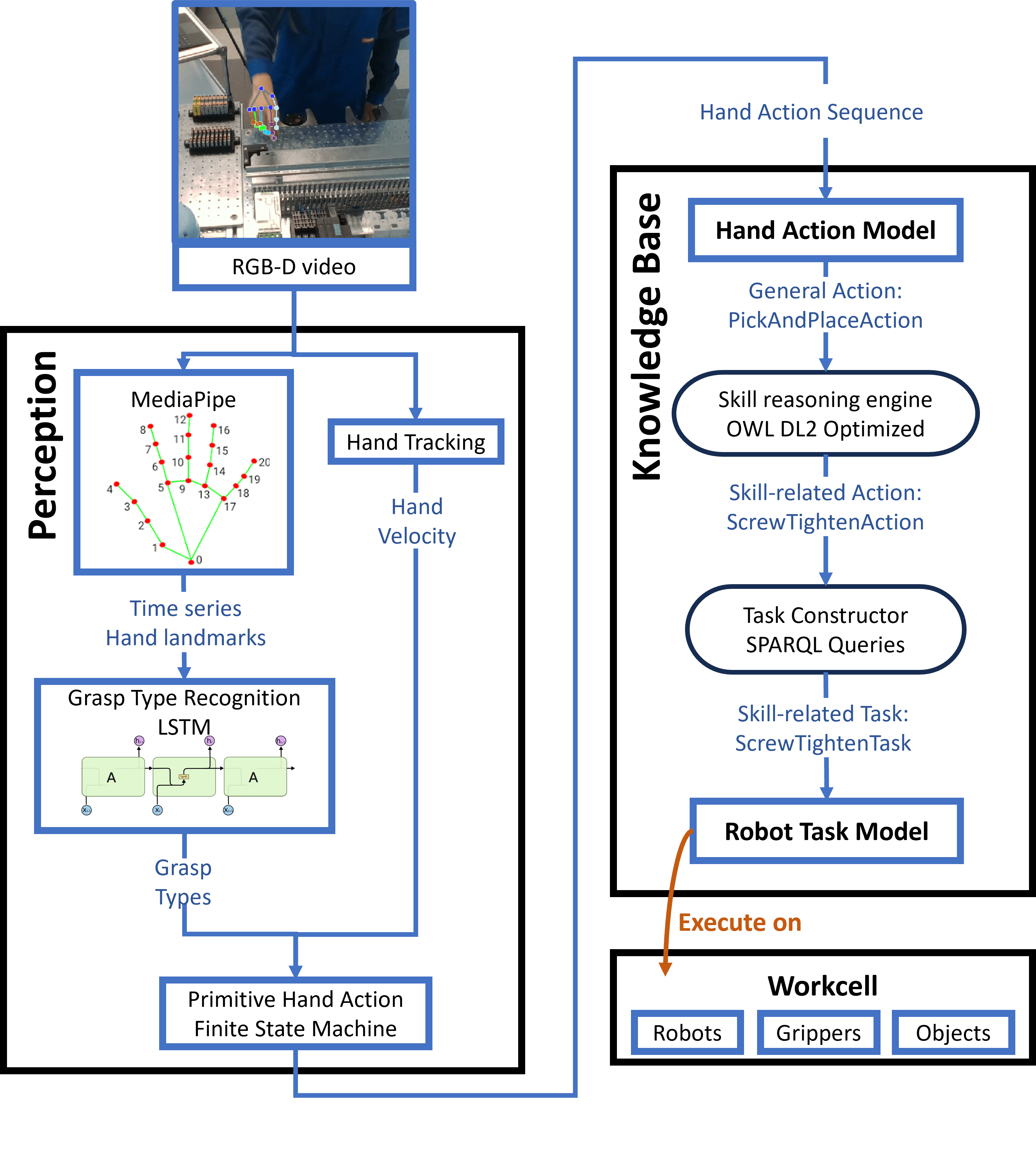

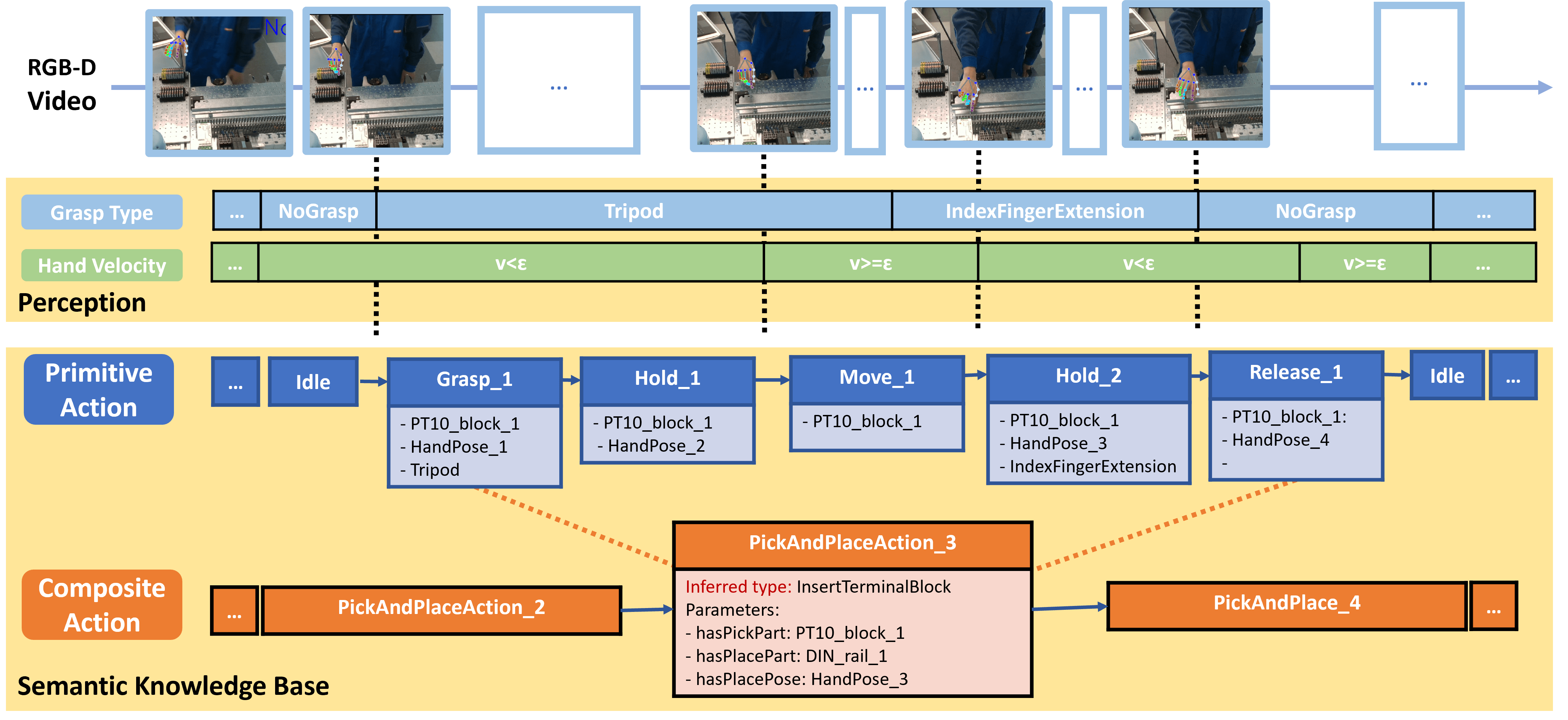

To address this issue, we use standardized grasp types as the fundamental feature for recognizing basic hand movements. A Long Short-Term Memory (LSTM) network recognizes these grasp types from hand landmarks.
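The sketch below shows how an LSTM maps a sequence of hand-landmark frames to a distribution over grasp types. It makes several assumptions that do not come from the paper. It uses 21 landmarks per hand with (x, y, z) coordinates (MediaPipe-style), and its grasp labels are hypothetical. It is a single-layer LSTM written in plain Python with random, untrained weights. A real system would train the weights on labeled demonstrations, typically with a deep-learning framework.

```python
import math
import random

# Hypothetical label set; the paper's actual grasp taxonomy is not listed here.
GRASP_TYPES = ["large_diameter", "precision_disk", "tripod", "lateral_pinch"]
N_LANDMARKS = 21              # assumption: 21 landmarks per hand (MediaPipe-style)
INPUT_DIM = N_LANDMARKS * 3   # (x, y, z) per landmark, flattened per frame
HIDDEN_DIM = 16


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def matvec(W, v):
    """Dense matrix-vector product on plain lists."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]


def rand_matrix(rows, cols, rng):
    return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]


class TinyLSTMClassifier:
    """Single-layer LSTM with a softmax head. Weights are random (untrained)."""

    def __init__(self, seed=0):
        rng = random.Random(seed)
        gates = "ifgo"  # input, forget, cell candidate, output
        self.W = {k: rand_matrix(HIDDEN_DIM, INPUT_DIM, rng) for k in gates}
        self.U = {k: rand_matrix(HIDDEN_DIM, HIDDEN_DIM, rng) for k in gates}
        self.b = {k: [0.0] * HIDDEN_DIM for k in gates}
        self.W_out = rand_matrix(len(GRASP_TYPES), HIDDEN_DIM, rng)

    def _gate(self, name, x, h, act):
        pre = zip(matvec(self.W[name], x), matvec(self.U[name], h), self.b[name])
        return [act(wx + uh + bias) for wx, uh, bias in pre]

    def step(self, x, h, c):
        i = self._gate("i", x, h, sigmoid)
        f = self._gate("f", x, h, sigmoid)
        g = self._gate("g", x, h, math.tanh)
        o = self._gate("o", x, h, sigmoid)
        c = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c, i, g)]  # cell state update
        h = [ok * math.tanh(ck) for ok, ck in zip(o, c)]                 # new hidden state
        return h, c

    def predict(self, frames):
        """Run the LSTM over a landmark sequence; classify from the last hidden state."""
        h, c = [0.0] * HIDDEN_DIM, [0.0] * HIDDEN_DIM
        for x in frames:
            h, c = self.step(x, h, c)
        logits = matvec(self.W_out, h)
        m = max(logits)  # subtract the max for a numerically stable softmax
        exps = [math.exp(z - m) for z in logits]
        total = sum(exps)
        return {label: e / total for label, e in zip(GRASP_TYPES, exps)}


# Usage: a synthetic 10-frame sequence of normalized landmark coordinates.
rng = random.Random(42)
sequence = [[rng.random() for _ in range(INPUT_DIM)] for _ in range(10)]
probs = TinyLSTMClassifier().predict(sequence)
print(max(probs, key=probs.get))
```

The classifier reads only the final hidden state. This suits one grasp label per landmark window. A per-frame labeler would apply the output head at every step instead.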

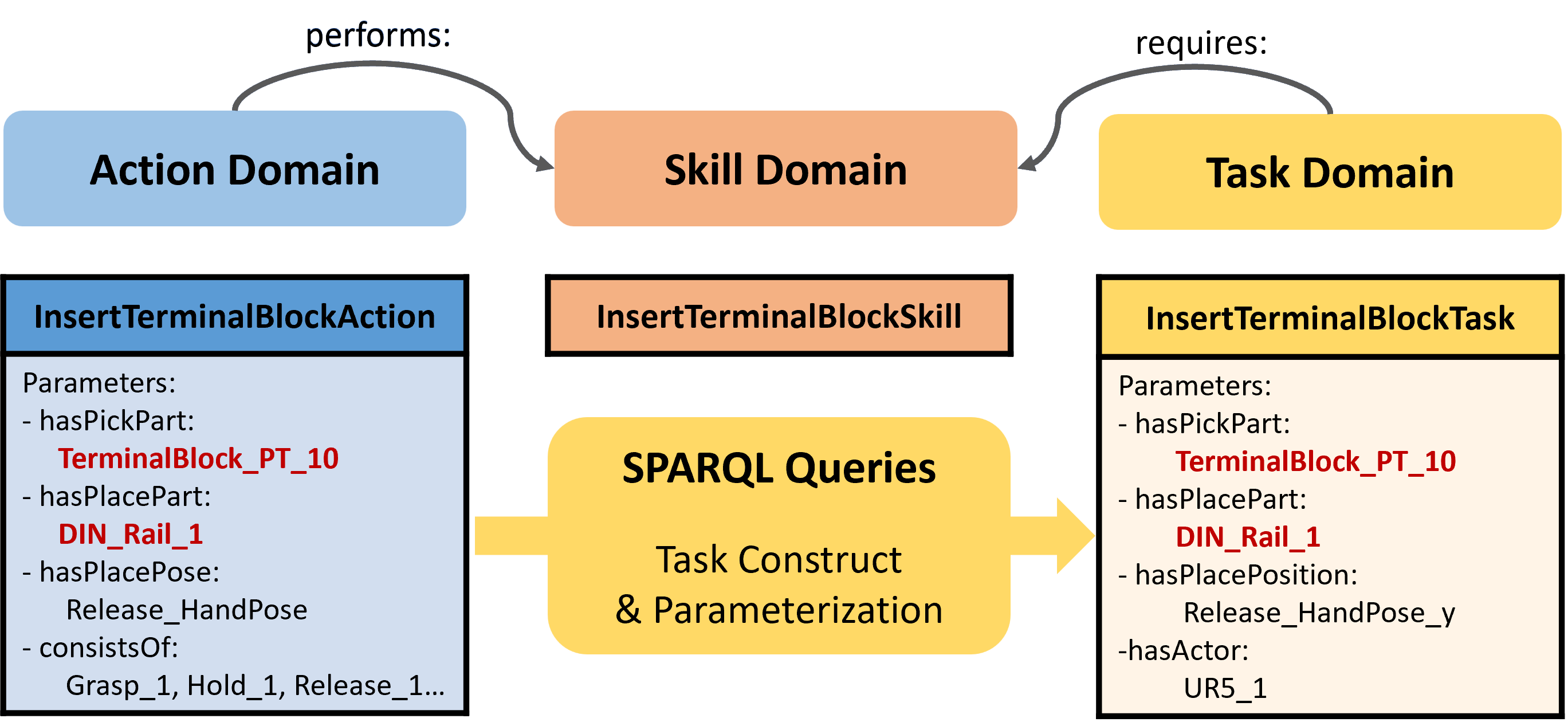

The product-specific actions, aggregated from the basic hand movements, are formally modeled in a semantic description language based on the Web Ontology Language (OWL).
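The aggregation step can be pictured with a minimal sketch, assuming that the recognizer emits one grasp label per frame and that `"none"` means the hand holds nothing. Consecutive identical labels are collapsed into movements, and flickers shorter than `min_len` frames are discarded. The remaining movements are packed into an action record that could then be asserted into the ontology. The property names `actedOn` and `hasGraspSequence` and the object ID are hypothetical, not taken from the paper's ontology.

```python
from dataclasses import dataclass
from itertools import groupby


@dataclass
class Movement:
    grasp_type: str
    start: int  # first frame index (inclusive)
    end: int    # last frame index (inclusive)


def segment(labels, min_len=3):
    """Collapse per-frame grasp labels into movements, dropping short flickers."""
    movements = []
    idx = 0
    for label, run in groupby(labels):
        n = len(list(run))
        if n >= min_len:
            if movements and movements[-1].grasp_type == label:
                # Same grasp resumed after a dropped flicker: extend the movement.
                movements[-1].end = idx + n - 1
            else:
                movements.append(Movement(label, idx, idx + n - 1))
        idx += n
    return movements


def to_action_instance(movements, obj):
    """Hypothetical action record: the ordered grasp types applied to one object."""
    return {
        "actedOn": obj,
        "hasGraspSequence": [m.grasp_type for m in movements if m.grasp_type != "none"],
    }


# Usage: a one-frame misclassification inside a tripod grasp is absorbed.
frames = ["none"] * 4 + ["tripod"] * 6 + ["lateral_pinch"] + ["tripod"] * 5 + ["none"] * 4
moves = segment(frames)
action = to_action_instance(moves, "terminal_block_01")
print(moves)
print(action)
```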

We use Description Logic (DL) to define each action by its characteristic properties. This allows an OWL reasoner to classify new action instances efficiently.
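The following hedged illustration shows how a DL definition enables this classification. The class, property, and individual names are hypothetical and are not taken from the paper's ontology. The axiom uses equivalence ($\equiv$) rather than subsumption, so it states necessary and sufficient conditions. A reasoner can therefore infer class membership for any instance that satisfies the right-hand side:

$$
\begin{aligned}
&\mathit{PickAndPlace} \equiv \mathit{HandAction} \sqcap \exists\, \mathit{hasMovement}.\mathit{PowerGrasp} \sqcap \exists\, \mathit{actsOn}.\mathit{Component} \\[4pt]
&\mathit{HandAction}(a_1),\ \mathit{hasMovement}(a_1, m_1),\ \mathit{PowerGrasp}(m_1),\ \mathit{actsOn}(a_1, o_1),\ \mathit{Component}(o_1) \\
&\quad\Longrightarrow\ \mathit{PickAndPlace}(a_1)
\end{aligned}
$$

The recognizer only needs to assert the observed facts about $a_1$. The reasoner then derives the product-specific action class, so no hand-written classification rules are required.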

The semantic models of hand actions, robot tasks, and workcell resources are interconnected and stored in a Knowledge Base (KB), which enables the efficient pair-wise translation between hand actions and robot tasks.

To reproduce human assembly processes, we convert actions into robot tasks via skill descriptions. The action parameters of the involved objects are reused to ensure product integrity.
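The translation through the knowledge base can be sketched as a lookup from action class to skill description. In this sketch, each skill description carries a mapping from action parameters to skill parameters. All action classes, skill names, and parameter names below are hypothetical. The sketch also replaces OWL queries against the KB with a plain dictionary. An inverse index makes the mapping pairwise, so a robot task can also be traced back to the hand action it reproduces.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SkillDescription:
    skill: str        # hypothetical robot skill name
    param_map: tuple  # (action parameter, skill parameter) pairs


# Hypothetical KB excerpt: action class -> skill description.
ACTION_TO_SKILL = {
    "PickAndPlace": SkillDescription(
        "pick_place", (("actsOn", "object"), ("targetPose", "place_pose"))),
    "Insert": SkillDescription(
        "peg_in_hole", (("actsOn", "object"), ("targetPose", "hole_pose"))),
}
# Inverse index so the translation works in both directions.
SKILL_TO_ACTION = {d.skill: a for a, d in ACTION_TO_SKILL.items()}


def action_to_task(action_class, action_params):
    """Build a robot task, reusing the demonstrated object parameters unchanged."""
    desc = ACTION_TO_SKILL[action_class]
    args = {skill_p: action_params[act_p] for act_p, skill_p in desc.param_map}
    return {"skill": desc.skill, "args": args}


def task_to_action(task):
    """Recover the hand action class and its parameters from a robot task."""
    action_class = SKILL_TO_ACTION[task["skill"]]
    desc = ACTION_TO_SKILL[action_class]
    params = {act_p: task["args"][skill_p] for act_p, skill_p in desc.param_map}
    return action_class, params


# Usage: the same part and pose from the demonstration flow into the robot task.
demo = {"actsOn": "circuit_breaker_07", "targetPose": (0.42, 0.10, 0.05)}
task = action_to_task("PickAndPlace", demo)
print(task)
```

Copying object identifiers and poses verbatim, rather than re-estimating them, is what keeps the robot working on the same parts in the same places as the demonstrator.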

We showcase and evaluate our method in an industrial production setting for control cabinet assembly.